Climate models give us a glimpse into the future. They provide a range of best- and worst-case scenarios for what lies ahead and, in the process, supply stakeholders with crucial information that can inform further research and decision-making.

Since the early 1990s, climate modelling has been carried out largely in conjunction with the IPCC process through the Coupled Model Intercomparison Projects (CMIP): rather than each modelling group producing its own scenarios on an ad hoc basis, the groups coordinate with one another and run their climate models using the same radiative forcing inputs, representing different scenarios.

“One thing that is a little underappreciated by the larger community is that when we look at the various projections of future warming that the IPCC provides, they are based on CMIP concentration-driven runs,” climate change researcher and modelling expert Zeke Hausfather tells ClimateForesight. “Essentially, you take a set of different climate models and you have them all run the same concentrations of greenhouse gases such as CO2, methane or nitrous oxide.”

Modellers do this because approximately one-third of climate models still lack a dynamic carbon cycle. In other words, they cannot dynamically calculate how emissions change atmospheric concentrations.

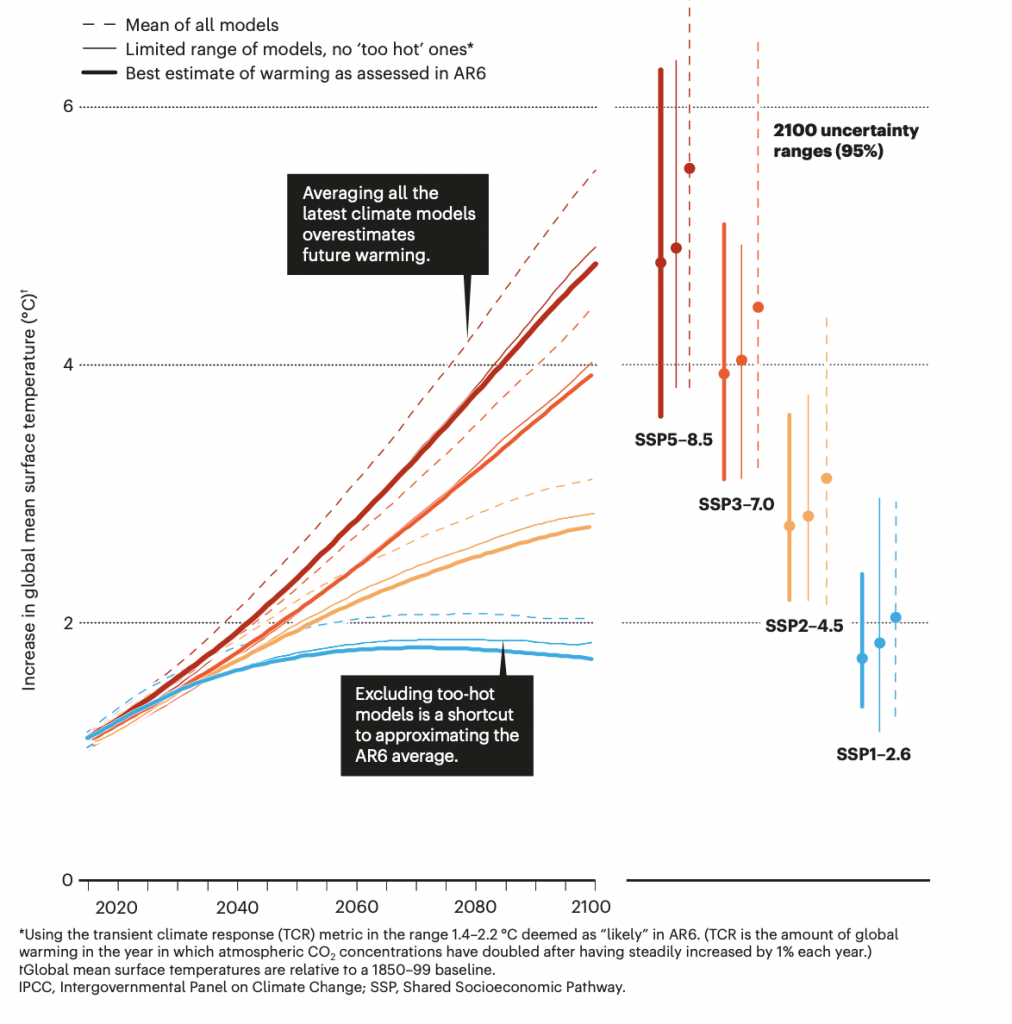

However, a subset of the latest generation of models is recognised as running “too hot”: in response to rising carbon dioxide, these models project more warming than other evidence supports.

In fact, some of these models project that a doubling of atmospheric CO2 concentrations would lead to more than 5 degrees Celsius of global warming, a conclusion not supported by other lines of evidence or by previous generations of models.

Are new models too hot?

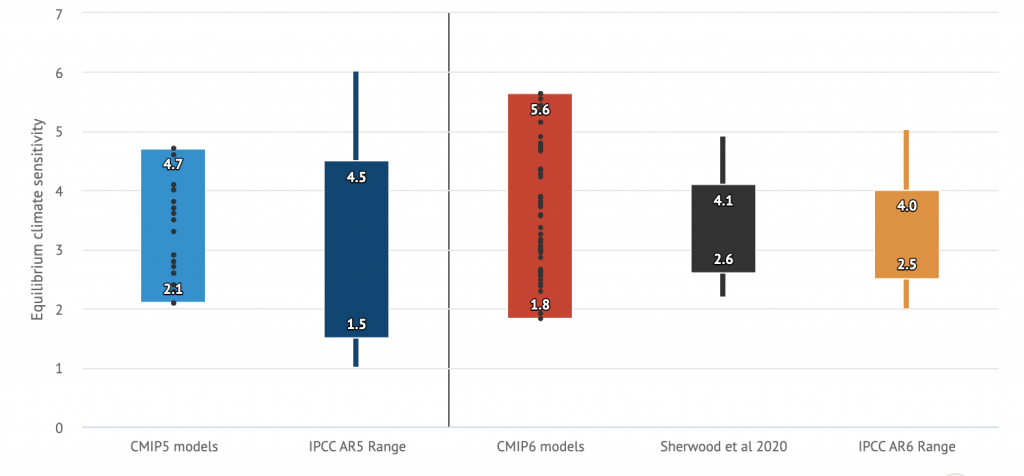

In the CMIP rounds preceding the most recent one, CMIP6, most models had a climate sensitivity, a measure of how much the planet warms in response to a doubling of atmospheric carbon dioxide (CO2) concentrations, of between 2 and 4.5 degrees Celsius. In contrast, 10 of the 55 CMIP6 models have sensitivities higher than 5 degrees Celsius.

As a result, new studies that use an average of these models, a common approach among researchers and one the IPCC itself used in the Assessment Reports preceding the Sixth Assessment Report (AR6), risk being biased towards too much warming.

For example, the recent paper “Avoiding ocean mass extinction from climate warming”, published in Science, relies on CMIP6 “intermodel averages”. The result? Under the high-emissions scenario the scientists modelled, global temperatures would rise by about 5 degrees Celsius by the end of this century and by 18 degrees Celsius by 2300, an amount of warming that is considered extremely unlikely, and this warming would lead to a mass extinction of ocean species.

In response to this issue, a new comment piece published in Nature, entitled “Climate simulations: recognize the ‘hot model’ problem”, argues that using the average and range of all models as the primary set of projections of future warming is no longer the most effective strategy.

“In the most recent round of modelling, CMIP6, a subset of about 20% of the climate models have very high climate sensitivity, whereby doubling CO2 concentrations would lead to increases of 5 or more degrees Celsius,” explains Hausfather, the lead author of the piece.

“We now have a conflicting situation. On the one hand, the new generation of models, with this very hot subset, is sort of pushing the overall average up. Yet at the same time, there is broad scientific evidence that allows climate sensitivity to be narrowed down. Some of these very high sensitivity values, of about 5 degrees Celsius, and the very low sensitivity values, below 2 degrees, are basically highly unlikely,” says Hausfather.

Recent studies have also shown that the “hot models” struggle to reproduce past temperatures, a common test of a model’s reliability and accuracy. This has cast further doubt on the “model democracy” approach, in which all models are given equal weight when projecting future warming.

From model democracy to model meritocracy

The higher sensitivity of the new models may also be related to their greater complexity. “As we add components, and therefore increase the number of poorly known internal model parameters, accuracy can go down,” explains CMCC researcher Enrico Scoccimarro.

“However, this isn’t necessarily a problem. We just have to make small changes to the way in which we use the information that they produce,” he continues.

The IPCC has already recognised the “hot model” problem, and in AR6 it found a way around it by giving different models different weights. “The IPCC did this very unusual thing: they sort of killed model democracy. As researchers, we need to really understand this and start taking it into account; otherwise there will be a big disconnect between what the IPCC and other studies project in terms of future warming,” says Hausfather.

There are two main ways of moving from model democracy to model meritocracy. The first is to weight models according to how well they agree with other lines of evidence, such as observational constraints on global mean warming.

In AR6, the IPCC rated models on their skill at reproducing historical temperatures and then used the best-performing models to arrive at its final “assessed warming” projections for different fossil fuel emissions scenarios.
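To make the idea of weighting more concrete, here is a minimal Python sketch of performance-based weighting. It assumes a simple Gaussian skill score, in which models whose simulated historical warming sits closer to an observed value receive more weight. This is an illustrative scheme, not the IPCC’s actual AR6 procedure, and every model name and number in it is hypothetical.

```python
import math

# Hypothetical ensemble: model name -> (simulated historical warming, projected
# end-of-century warming), both in degC. All values are invented for illustration.
ENSEMBLE = {
    "model_a": (1.05, 3.1),
    "model_b": (1.10, 3.4),
    "model_c": (1.40, 5.2),  # a hypothetical "hot" model that overshoots the past
    "model_d": (1.00, 2.9),
}

OBSERVED_WARMING = 1.1  # hypothetical observed historical warming, degC
SIGMA = 0.1  # hypothetical tolerance: smaller values punish mismatches harder


def skill_weights(ensemble, observed, sigma):
    """Gaussian performance weights, normalised to sum to 1: the closer a
    model's historical warming is to the observed value, the larger its weight."""
    raw = {
        name: math.exp(-(((hist - observed) / sigma) ** 2))
        for name, (hist, _) in ensemble.items()
    }
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def equal_weight_mean(ensemble):
    """'Model democracy': every model's projection counts equally."""
    return sum(proj for _, proj in ensemble.values()) / len(ensemble)


def weighted_mean(ensemble, weights):
    """'Model meritocracy': projections averaged using skill weights."""
    return sum(weights[name] * proj for name, (_, proj) in ensemble.items())


if __name__ == "__main__":
    weights = skill_weights(ENSEMBLE, OBSERVED_WARMING, SIGMA)
    print(f"equal-weight mean:   {equal_weight_mean(ENSEMBLE):.2f} degC")
    print(f"skill-weighted mean: {weighted_mean(ENSEMBLE, weights):.2f} degC")
```

With these invented numbers, the hypothetical “hot” model_c ends up with almost no weight, so the skill-weighted mean falls below the equal-weight mean. The `SIGMA` parameter, also an assumption of this sketch, sets how harshly poor historical skill is penalised.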

This approach still allows “hot models” to be used, even if they reach a given level of warming too quickly. Scoccimarro uses a similar tactic in his research on extreme weather events: “The IPCC’s approach goes beyond the concept of model democracy and starts giving different importance to different models. If there are ten models, they don’t get equal weighting. It becomes a model meritocracy.”

The second change in methodology is for researchers to move away from time-based scenarios and instead focus on the impacts of a given level of warming, regardless of when it occurs.

“Now, when I study how extreme events may change in the future, I approach the issue by looking at what happens when temperatures rise by a given amount, rather than at when this will happen. That way I can keep using as many models as possible without being affected too much by the ‘hot models’,” continues Scoccimarro.

Researchers thus focus on climate outcomes at different global warming levels, regardless of when those levels are reached. “The way you do that is you essentially take all the climate models and just sample what the world looks like for each model when it reaches four degrees, even if they reach four degrees at different moments depending on their climate sensitivity,” explains Hausfather. “It turns out that the impacts are very similar no matter when four degrees is reached, at least for most systems, and for surface impacts in particular the time element is less important than you might think. This is one solution that allows us to use all the climate models, including the high-sensitivity ones.”
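The warming-level approach Hausfather describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: each model run is a hypothetical list of annual global mean temperature anomalies plus one other yearly diagnostic, and a model is taken to “reach” a level at the centre of the first 20-year window whose mean meets it. The 20-year window is a choice made here and is exposed as a parameter; all data are synthetic.

```python
from statistics import mean


def first_window_start(gmst, level, window):
    """Index where the first `window`-year slice with mean warming >= level begins."""
    for start in range(len(gmst) - window + 1):
        if mean(gmst[start:start + window]) >= level:
            return start
    return None  # this run never reaches the warming level


def sample_at_warming_level(runs, level=4.0, window=20):
    """For each model, return (central year, mean of `field`) over the window
    in which that model first reaches `level` degC, whatever the calendar date."""
    results = {}
    for name, (years, gmst, field) in runs.items():
        start = first_window_start(gmst, level, window)
        if start is None:
            continue  # skip runs that never reach the level instead of extrapolating
        results[name] = (years[start + window // 2], mean(field[start:start + window]))
    return results


def synthetic_run(rate, years):
    """Hypothetical run: linear warming from 1 degC in 2000 at `rate` degC/yr, plus a
    made-up regional diagnostic assumed to scale as 1.5x the global anomaly."""
    gmst = [1.0 + rate * (y - 2000) for y in years]
    return years, gmst, [1.5 * t for t in gmst]


if __name__ == "__main__":
    years = list(range(2000, 2300))
    runs = {
        "hot_model": synthetic_run(0.05, years),    # warms fast (high sensitivity)
        "cool_model": synthetic_run(0.025, years),  # warms slowly
    }
    for name, (year, value) in sample_at_warming_level(runs).items():
        print(f"{name}: reaches 4 degC around {year}, diagnostic = {value:.2f}")
```

With these synthetic runs, the fast-warming model reaches 4 degrees around 2061 and the slower one around 2121, yet the sampled diagnostic is almost the same for both because it was built to scale with global temperature. With real model output, whether impacts match at a given warming level is precisely what has to be checked.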

One potential drawback of this approach is that it may not provide enough information about warming trajectories, something that policymakers, for example, value greatly. “If for some reason the warming trajectory, rather than just the global warming level, is important for a particular climate outcome, researchers should focus on what the most recent IPCC report defines as the likely sensitivity range, whose average is very similar to the IPCC’s assessed warming projections,” explains Hausfather.

“If I am looking at how a given temperature change will affect a certain process or interaction, whether this happens in 2090 or 2100 is not that important to my research. However, if we then have to inform stakeholders of specific impacts by a specific date, we should use a narrower selection of models,” agrees Scoccimarro.
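One way to build a narrower selection of models is to keep only those whose sensitivity falls within the IPCC’s likely range. The minimal sketch below assumes the screening is done on equilibrium climate sensitivity (ECS) using the AR6 likely range of 2.5 to 4 degrees Celsius. The model names and ECS values are hypothetical, and screening on a different sensitivity metric would follow the same pattern.

```python
# Sketch: screen a model ensemble by equilibrium climate sensitivity (ECS).
# Assumption: the AR6 "likely" ECS range of 2.5 to 4.0 degC is the screening criterion.
LIKELY_ECS_RANGE = (2.5, 4.0)

# Hypothetical ensemble: model name -> ECS in degC (invented values).
MODEL_ECS = {
    "model_a": 2.3,
    "model_b": 3.0,
    "model_c": 3.7,
    "model_d": 5.3,
    "model_e": 5.6,
}


def screen_by_ecs(model_ecs, ecs_range=LIKELY_ECS_RANGE):
    """Return the sorted names of models whose ECS lies inside ecs_range (inclusive)."""
    low, high = ecs_range
    return sorted(name for name, ecs in model_ecs.items() if low <= ecs <= high)


if __name__ == "__main__":
    print("models kept:", screen_by_ecs(MODEL_ECS))
```

The inclusive bounds, and the choice to drop rather than down-weight out-of-range models, are design choices of this sketch rather than a prescribed method.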